https://www.kaggle.com/code/emilyjiminroh/cnn-fashion-mnist

Import libraries

In [1]:

import sys

import os

In [2]:

from keras.datasets import mnist

from keras.utils import np_utils # one-hot encoding: np_utils.to_categorical(classes, number of classes)

In [3]:

from keras.models import Sequential

from keras.layers import Dense, Dropout, Flatten, Conv2D, MaxPooling2D

from keras.callbacks import ModelCheckpoint, EarlyStopping

In [4]:

import pandas as pd

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

import seaborn as sns

Exploring the data

In [5]:

train = pd.read_csv('../input/fashionmnist/fashion-mnist_train.csv')

test = pd.read_csv('../input/fashionmnist/fashion-mnist_test.csv')

In [6]:

train.head()

Out[6]:

| | label | pixel1 | pixel2 | pixel3 | pixel4 | pixel5 | pixel6 | pixel7 | pixel8 | pixel9 | ... | pixel775 | pixel776 | pixel777 | pixel778 | pixel779 | pixel780 | pixel781 | pixel782 | pixel783 | pixel784 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 1 | 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 2 | 6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 5 | 0 | ... | 0 | 0 | 0 | 30 | 43 | 0 | 0 | 0 | 0 | 0 |
| 3 | 0 | 0 | 0 | 0 | 1 | 2 | 0 | 0 | 0 | 0 | ... | 3 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |
| 4 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

5 rows × 785 columns

In [7]:

train.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 60000 entries, 0 to 59999

Columns: 785 entries, label to pixel784

dtypes: int64(785)

memory usage: 359.3 MB

In [8]:

test.head()

Out[8]:

| | label | pixel1 | pixel2 | pixel3 | pixel4 | pixel5 | pixel6 | pixel7 | pixel8 | pixel9 | ... | pixel775 | pixel776 | pixel777 | pixel778 | pixel779 | pixel780 | pixel781 | pixel782 | pixel783 | pixel784 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 9 | 8 | ... | 103 | 87 | 56 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 34 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 2 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 14 | 53 | 99 | ... | 0 | 0 | 0 | 0 | 63 | 53 | 31 | 0 | 0 | 0 |
| 3 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 137 | 126 | 140 | 0 | 133 | 224 | 222 | 56 | 0 | 0 |
| 4 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

5 rows × 785 columns

In [9]:

test.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 10000 entries, 0 to 9999

Columns: 785 entries, label to pixel784

dtypes: int64(785)

memory usage: 59.9 MB
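The memory figures reported by info() can be checked by hand: every one of the 785 columns is stored as int64, which takes 8 bytes per value. The sketch below redoes that arithmetic; the helper name `frame_size_mib` is made up for this illustration, and the small index overhead is ignored. pandas divides by 1024 at each step, so its "MB" is really MiB.

```python
# Rough estimate of a DataFrame's data size: rows x columns x bytes per value.
# pandas reports this figure in MiB (1 MiB = 2**20 bytes) although it labels it "MB".

def frame_size_mib(rows: int, cols: int, bytes_per_value: int) -> float:
    """Return the approximate size of the value buffer in MiB (index excluded)."""
    return rows * cols * bytes_per_value / 2**20

train_int64 = frame_size_mib(60_000, 785, 8)   # int64 -> 8 bytes per value
test_int64 = frame_size_mib(10_000, 785, 8)
train_uint8 = frame_size_mib(60_000, 785, 1)   # uint8 is enough for values 0..255

print(f"train as int64: {train_int64:.1f} MiB")
print(f"test  as int64: {test_int64:.1f} MiB")
print(f"train as uint8: {train_uint8:.1f} MiB")
```

Since pixel values only range from 0 to 255, storing them as uint8 would cut the footprint to one eighth. The notebook keeps the default int64, which is fine at this data size.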

- The label and the pixels sit in the same table, so they need to be separated.

Separating the labels

In [10]:

X_train = train.drop(["label"],axis=1)

Y_train = train["label"]

In [11]:

X_test = test.drop(["label"],axis=1)

Y_test = test["label"]

In [12]:

X_train.values[0]

Out[12]:

array([ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 4, 0, 0,

0, 0, 0, 62, 61, 21, 29, 23, 51, 136, 61, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 88, 201, 228, 225, 255, 115, 62, 137, 255, 235, 222,

255, 135, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 47, 252, 234, 238, 224, 215, 215, 229, 108, 180, 207,

214, 224, 231, 249, 254, 45, 0, 0, 0, 0, 0, 0, 0,

0, 1, 0, 0, 214, 222, 210, 213, 224, 225, 217, 220, 254,

233, 219, 221, 217, 223, 221, 240, 254, 0, 0, 1, 0, 0,

0, 1, 0, 0, 0, 128, 237, 207, 224, 224, 207, 216, 214,

210, 208, 211, 221, 208, 219, 213, 226, 211, 237, 150, 0, 0,

0, 0, 0, 0, 2, 0, 0, 237, 222, 215, 207, 210, 212,

213, 206, 214, 213, 214, 213, 210, 215, 214, 206, 199, 218, 255,

13, 0, 2, 0, 0, 0, 4, 0, 85, 228, 210, 218, 200,

211, 208, 203, 215, 210, 209, 209, 210, 213, 211, 210, 217, 206,

213, 231, 175, 0, 0, 0, 0, 0, 0, 0, 217, 224, 215,

206, 205, 204, 217, 230, 222, 215, 224, 233, 228, 232, 228, 224,

207, 212, 215, 213, 229, 31, 0, 4, 0, 1, 0, 21, 225,

212, 212, 203, 211, 225, 193, 139, 136, 195, 147, 156, 139, 128,

162, 197, 223, 207, 220, 213, 232, 177, 0, 0, 0, 0, 0,

123, 226, 207, 211, 209, 205, 228, 158, 90, 103, 186, 138, 100,

121, 147, 158, 183, 226, 208, 214, 209, 216, 255, 13, 0, 1,

0, 0, 226, 219, 202, 208, 206, 205, 216, 184, 156, 150, 193,

170, 164, 168, 188, 186, 200, 219, 216, 213, 213, 211, 233, 148,

0, 0, 0, 45, 227, 204, 214, 211, 218, 222, 221, 230, 229,

221, 213, 224, 233, 226, 220, 219, 221, 224, 223, 217, 210, 218,

213, 254, 0, 0, 0, 157, 226, 203, 207, 211, 209, 215, 205,

198, 207, 208, 201, 201, 197, 203, 205, 210, 207, 213, 214, 214,

214, 213, 208, 234, 107, 0, 0, 235, 213, 204, 211, 210, 209,

213, 202, 197, 204, 215, 217, 213, 212, 210, 206, 212, 203, 211,

218, 215, 214, 208, 209, 222, 230, 0, 52, 255, 207, 200, 208,

213, 210, 210, 208, 207, 202, 201, 209, 216, 216, 216, 216, 214,

212, 205, 215, 201, 228, 208, 214, 212, 218, 25, 118, 217, 201,

206, 208, 213, 208, 205, 206, 210, 211, 202, 199, 207, 208, 209,

210, 207, 210, 210, 245, 139, 119, 255, 202, 203, 236, 114, 171,

238, 212, 203, 220, 216, 217, 209, 207, 205, 210, 211, 206, 204,

206, 209, 211, 215, 210, 206, 221, 242, 0, 224, 234, 230, 181,

26, 39, 145, 201, 255, 157, 115, 250, 200, 207, 206, 207, 213,

216, 206, 205, 206, 207, 206, 215, 207, 221, 238, 0, 0, 188,

85, 0, 0, 0, 0, 0, 31, 0, 129, 253, 190, 207, 208,

208, 208, 209, 211, 211, 209, 209, 209, 212, 201, 226, 165, 0,

0, 0, 0, 0, 0, 2, 0, 0, 0, 0, 89, 254, 199,

199, 192, 196, 198, 199, 201, 202, 203, 204, 203, 203, 200, 222,

155, 0, 3, 3, 3, 2, 0, 0, 0, 1, 5, 0, 0,

255, 218, 226, 232, 228, 224, 222, 220, 219, 219, 217, 221, 220,

212, 236, 95, 0, 2, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 155, 194, 168, 170, 171, 173, 173, 179, 177, 175, 172,

171, 167, 161, 180, 0, 0, 1, 0, 1, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0])

In [13]:

Y_train

Out[13]:

0 2

1 9

2 6

3 0

4 3

..

59995 9

59996 1

59997 8

59998 8

59999 7

Name: label, Length: 60000, dtype: int64

- Both train and test are now split into X and Y.

Preprocessing the X data

- Check the type, then convert with to_numpy()

In [14]:

type(X_train)

Out[14]:

pandas.core.frame.DataFrame

In [15]:

type(X_test)

Out[15]:

pandas.core.frame.DataFrame

In [16]:

X_test = X_test.to_numpy()

In [17]:

X_train = X_train.to_numpy()

In [18]:

type(X_train)

Out[18]:

numpy.ndarray

In [19]:

type(X_test)

Out[19]:

numpy.ndarray

- reshape() each 784-value row to (28, 28, 1), i.e. a single channel -> cast to float64 -> divide by 255 to normalize

In [20]:

X_train = X_train.reshape(X_train.shape[0], 28,28,1).astype('float64') / 255

In [21]:

X_test = X_test.reshape(X_test.shape[0], 28,28,1).astype('float64') / 255
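To make the reshape step concrete, here is a small sketch on made-up data. The array `flat` and the pixel values placed in it are hypothetical stand-ins for CSV rows. It shows how column `pixelK` ends up at row `(K-1) // 28`, column `(K-1) % 28` of the image, and how dividing by 255 maps the values into the range 0 to 1.

```python
import numpy as np

# Two made-up flat "images" of 784 values each (stand-ins for rows of the CSV).
flat = np.zeros((2, 784), dtype=np.int64)
flat[0, 0] = 255        # pixel1   -> row 0, column 0
flat[0, 28] = 62        # pixel29  -> row 1, column 0
flat[1, 783] = 4        # pixel784 -> row 27, column 27

# Same operation as in the notebook: (N, 784) -> (N, 28, 28, 1), then scale to [0, 1].
images = flat.reshape(flat.shape[0], 28, 28, 1).astype("float64") / 255

print(images.shape)
print(images[0, 0, 0, 0], images[0, 1, 0, 0], images[1, 27, 27, 0])
```

The trailing dimension of size 1 is the channel axis that Conv2D expects for grayscale input.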

- Check the normalization

In [22]:

print(X_train[0])

[[[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

[0. ]

[0.01568627]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0.24313725]

[0.23921569]

[0.08235294]

[0.11372549]

[0.09019608]

[0.2 ]

[0.53333333]

[0.23921569]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0.34509804]

[0.78823529]

[0.89411765]

[0.88235294]

[1. ]

[0.45098039]

[0.24313725]

[0.5372549 ]

[1. ]

[0.92156863]

[0.87058824]

[1. ]

[0.52941176]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0.18431373]

[0.98823529]

[0.91764706]

[0.93333333]

[0.87843137]

[0.84313725]

[0.84313725]

[0.89803922]

[0.42352941]

[0.70588235]

[0.81176471]

[0.83921569]

[0.87843137]

[0.90588235]

[0.97647059]

[0.99607843]

[0.17647059]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0.00392157]

[0. ]

[0. ]

[0.83921569]

[0.87058824]

[0.82352941]

[0.83529412]

[0.87843137]

[0.88235294]

[0.85098039]

[0.8627451 ]

[0.99607843]

[0.91372549]

[0.85882353]

[0.86666667]

[0.85098039]

[0.8745098 ]

[0.86666667]

[0.94117647]

[0.99607843]

[0. ]

[0. ]

[0.00392157]

[0. ]

[0. ]

[0. ]]

[[0.00392157]

[0. ]

[0. ]

[0. ]

[0.50196078]

[0.92941176]

[0.81176471]

[0.87843137]

[0.87843137]

[0.81176471]

[0.84705882]

[0.83921569]

[0.82352941]

[0.81568627]

[0.82745098]

[0.86666667]

[0.81568627]

[0.85882353]

[0.83529412]

[0.88627451]

[0.82745098]

[0.92941176]

[0.58823529]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]]

[[0. ]

[0.00784314]

[0. ]

[0. ]

[0.92941176]

[0.87058824]

[0.84313725]

[0.81176471]

[0.82352941]

[0.83137255]

[0.83529412]

[0.80784314]

[0.83921569]

[0.83529412]

[0.83921569]

[0.83529412]

[0.82352941]

[0.84313725]

[0.83921569]

[0.80784314]

[0.78039216]

[0.85490196]

[1. ]

[0.05098039]

[0. ]

[0.00784314]

[0. ]

[0. ]]

[[0. ]

[0.01568627]

[0. ]

[0.33333333]

[0.89411765]

[0.82352941]

[0.85490196]

[0.78431373]

[0.82745098]

[0.81568627]

[0.79607843]

[0.84313725]

[0.82352941]

[0.81960784]

[0.81960784]

[0.82352941]

[0.83529412]

[0.82745098]

[0.82352941]

[0.85098039]

[0.80784314]

[0.83529412]

[0.90588235]

[0.68627451]

[0. ]

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

[0.85098039]

[0.87843137]

[0.84313725]

[0.80784314]

[0.80392157]

[0.8 ]

[0.85098039]

[0.90196078]

[0.87058824]

[0.84313725]

[0.87843137]

[0.91372549]

[0.89411765]

[0.90980392]

[0.89411765]

[0.87843137]

[0.81176471]

[0.83137255]

[0.84313725]

[0.83529412]

[0.89803922]

[0.12156863]

[0. ]

[0.01568627]

[0. ]]

[[0.00392157]

[0. ]

[0.08235294]

[0.88235294]

[0.83137255]

[0.83137255]

[0.79607843]

[0.82745098]

[0.88235294]

[0.75686275]

[0.54509804]

[0.53333333]

[0.76470588]

[0.57647059]

[0.61176471]

[0.54509804]

[0.50196078]

[0.63529412]

[0.77254902]

[0.8745098 ]

[0.81176471]

[0.8627451 ]

[0.83529412]

[0.90980392]

[0.69411765]

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0.48235294]

[0.88627451]

[0.81176471]

[0.82745098]

[0.81960784]

[0.80392157]

[0.89411765]

[0.61960784]

[0.35294118]

[0.40392157]

[0.72941176]

[0.54117647]

[0.39215686]

[0.4745098 ]

[0.57647059]

[0.61960784]

[0.71764706]

[0.88627451]

[0.81568627]

[0.83921569]

[0.81960784]

[0.84705882]

[1. ]

[0.05098039]

[0. ]

[0.00392157]]

[[0. ]

[0. ]

[0.88627451]

[0.85882353]

[0.79215686]

[0.81568627]

[0.80784314]

[0.80392157]

[0.84705882]

[0.72156863]

[0.61176471]

[0.58823529]

[0.75686275]

[0.66666667]

[0.64313725]

[0.65882353]

[0.7372549 ]

[0.72941176]

[0.78431373]

[0.85882353]

[0.84705882]

[0.83529412]

[0.83529412]

[0.82745098]

[0.91372549]

[0.58039216]

[0. ]

[0. ]]

[[0. ]

[0.17647059]

[0.89019608]

[0.8 ]

[0.83921569]

[0.82745098]

[0.85490196]

[0.87058824]

[0.86666667]

[0.90196078]

[0.89803922]

[0.86666667]

[0.83529412]

[0.87843137]

[0.91372549]

[0.88627451]

[0.8627451 ]

[0.85882353]

[0.86666667]

[0.87843137]

[0.8745098 ]

[0.85098039]

[0.82352941]

[0.85490196]

[0.83529412]

[0.99607843]

[0. ]

[0. ]]

[[0. ]

[0.61568627]

[0.88627451]

[0.79607843]

[0.81176471]

[0.82745098]

[0.81960784]

[0.84313725]

[0.80392157]

[0.77647059]

[0.81176471]

[0.81568627]

[0.78823529]

[0.78823529]

[0.77254902]

[0.79607843]

[0.80392157]

[0.82352941]

[0.81176471]

[0.83529412]

[0.83921569]

[0.83921569]

[0.83921569]

[0.83529412]

[0.81568627]

[0.91764706]

[0.41960784]

[0. ]]

[[0. ]

[0.92156863]

[0.83529412]

[0.8 ]

[0.82745098]

[0.82352941]

[0.81960784]

[0.83529412]

[0.79215686]

[0.77254902]

[0.8 ]

[0.84313725]

[0.85098039]

[0.83529412]

[0.83137255]

[0.82352941]

[0.80784314]

[0.83137255]

[0.79607843]

[0.82745098]

[0.85490196]

[0.84313725]

[0.83921569]

[0.81568627]

[0.81960784]

[0.87058824]

[0.90196078]

[0. ]]

[[0.20392157]

[1. ]

[0.81176471]

[0.78431373]

[0.81568627]

[0.83529412]

[0.82352941]

[0.82352941]

[0.81568627]

[0.81176471]

[0.79215686]

[0.78823529]

[0.81960784]

[0.84705882]

[0.84705882]

[0.84705882]

[0.84705882]

[0.83921569]

[0.83137255]

[0.80392157]

[0.84313725]

[0.78823529]

[0.89411765]

[0.81568627]

[0.83921569]

[0.83137255]

[0.85490196]

[0.09803922]]

[[0.4627451 ]

[0.85098039]

[0.78823529]

[0.80784314]

[0.81568627]

[0.83529412]

[0.81568627]

[0.80392157]

[0.80784314]

[0.82352941]

[0.82745098]

[0.79215686]

[0.78039216]

[0.81176471]

[0.81568627]

[0.81960784]

[0.82352941]

[0.81176471]

[0.82352941]

[0.82352941]

[0.96078431]

[0.54509804]

[0.46666667]

[1. ]

[0.79215686]

[0.79607843]

[0.9254902 ]

[0.44705882]]

[[0.67058824]

[0.93333333]

[0.83137255]

[0.79607843]

[0.8627451 ]

[0.84705882]

[0.85098039]

[0.81960784]

[0.81176471]

[0.80392157]

[0.82352941]

[0.82745098]

[0.80784314]

[0.8 ]

[0.80784314]

[0.81960784]

[0.82745098]

[0.84313725]

[0.82352941]

[0.80784314]

[0.86666667]

[0.94901961]

[0. ]

[0.87843137]

[0.91764706]

[0.90196078]

[0.70980392]

[0.10196078]]

[[0.15294118]

[0.56862745]

[0.78823529]

[1. ]

[0.61568627]

[0.45098039]

[0.98039216]

[0.78431373]

[0.81176471]

[0.80784314]

[0.81176471]

[0.83529412]

[0.84705882]

[0.80784314]

[0.80392157]

[0.80784314]

[0.81176471]

[0.80784314]

[0.84313725]

[0.81176471]

[0.86666667]

[0.93333333]

[0. ]

[0. ]

[0.7372549 ]

[0.33333333]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

[0.12156863]

[0. ]

[0.50588235]

[0.99215686]

[0.74509804]

[0.81176471]

[0.81568627]

[0.81568627]

[0.81568627]

[0.81960784]

[0.82745098]

[0.82745098]

[0.81960784]

[0.81960784]

[0.81960784]

[0.83137255]

[0.78823529]

[0.88627451]

[0.64705882]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]]

[[0.00784314]

[0. ]

[0. ]

[0. ]

[0. ]

[0.34901961]

[0.99607843]

[0.78039216]

[0.78039216]

[0.75294118]

[0.76862745]

[0.77647059]

[0.78039216]

[0.78823529]

[0.79215686]

[0.79607843]

[0.8 ]

[0.79607843]

[0.79607843]

[0.78431373]

[0.87058824]

[0.60784314]

[0. ]

[0.01176471]

[0.01176471]

[0.01176471]

[0.00784314]

[0. ]]

[[0. ]

[0. ]

[0.00392157]

[0.01960784]

[0. ]

[0. ]

[1. ]

[0.85490196]

[0.88627451]

[0.90980392]

[0.89411765]

[0.87843137]

[0.87058824]

[0.8627451 ]

[0.85882353]

[0.85882353]

[0.85098039]

[0.86666667]

[0.8627451 ]

[0.83137255]

[0.9254902 ]

[0.37254902]

[0. ]

[0.00784314]

[0. ]

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0.60784314]

[0.76078431]

[0.65882353]

[0.66666667]

[0.67058824]

[0.67843137]

[0.67843137]

[0.70196078]

[0.69411765]

[0.68627451]

[0.6745098 ]

[0.67058824]

[0.65490196]

[0.63137255]

[0.70588235]

[0. ]

[0. ]

[0.00392157]

[0. ]

[0.00392157]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]

[0. ]]]

In [23]:

print(X_test)

[[[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0.01568627]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0.00392157]

...

[0. ]

[0. ]

[0. ]]

...

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]]

[[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

...

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]]

[[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

...

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]]

...

[[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

...

[[0. ]

[0. ]

[0.01176471]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0.00784314]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0.00392157]

[0. ]]]

[[[0. ]

[0.00392157]

[0.01176471]

...

[0. ]

[0.00784314]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0.45490196]

...

[0.78431373]

[0.11372549]

[0. ]]

...

[[0. ]

[0. ]

[0.01568627]

...

[0. ]

[0.01568627]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0.00784314]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]]

[[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

...

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]

[[0. ]

[0. ]

[0. ]

...

[0. ]

[0. ]

[0. ]]]]

Binarizing the Y data (one-hot encoding)

- Check the classes

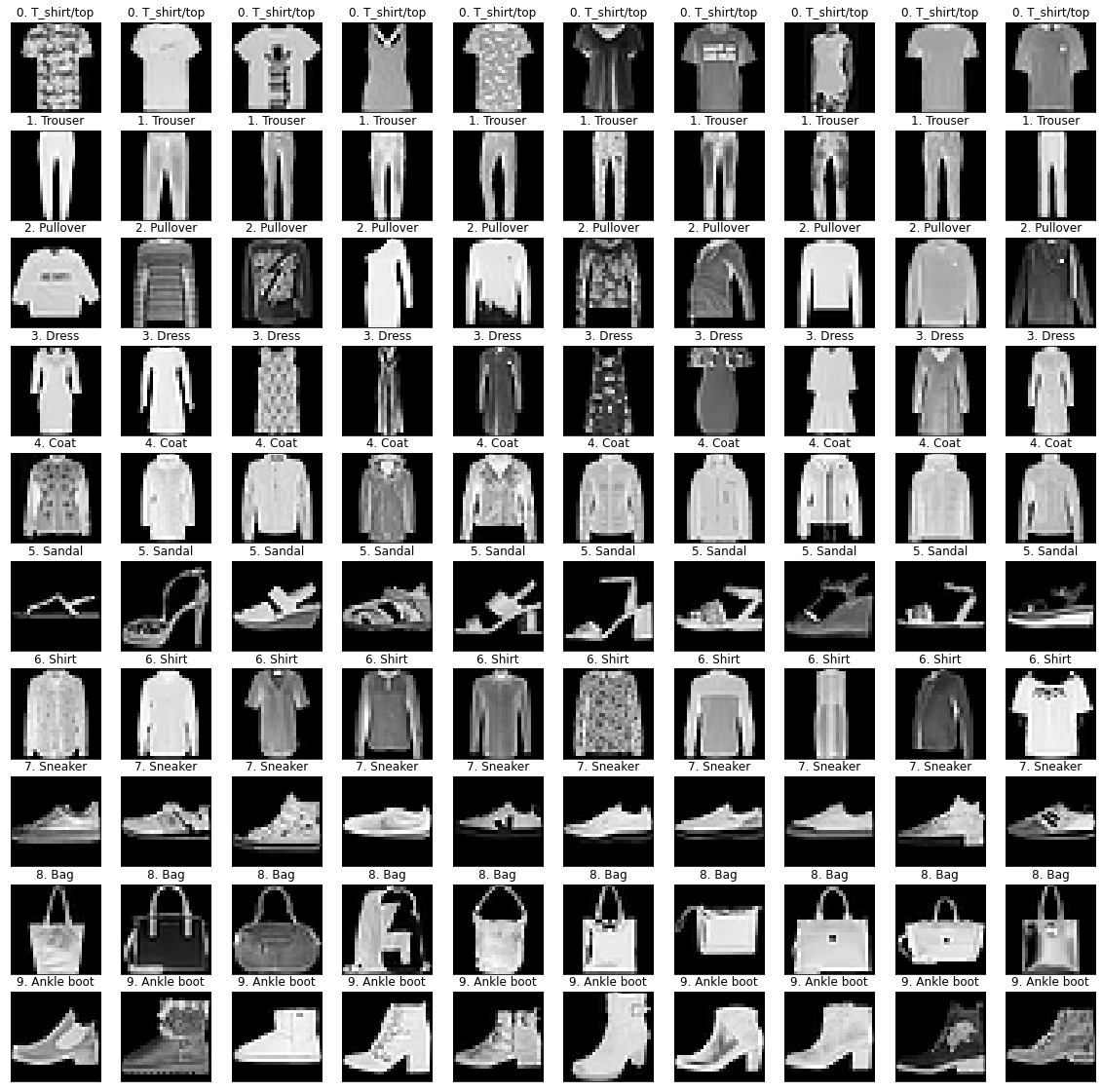

<10 classes in total>

- 0: T-shirt/top
- 1: Trouser
- 2: Pullover
- 3: Dress
- 4: Coat
- 5: Sandal
- 6: Shirt
- 7: Sneaker
- 8: Bag
- 9: Ankle boot

In [24]:

class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',

               'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']

plt.figure(figsize=(20, 20))

# One grid row per class k, showing the first 10 training images of that class

for k in range(10):

    XX = X_train[Y_train == k]

    YY = Y_train[Y_train == k].reset_index()['label']

    for i in range(10):

        plt.subplot(10, 10, k*10 + i + 1)

        plt.xticks([])

        plt.yticks([])

        plt.grid(False)

        # Drop the channel axis so imshow receives a 28 x 28 image

        plt.imshow(XX[i][:,:,0], cmap='gray')

        label_index = int(YY[i])

        plt.title('{}. {}'.format(k, class_names[label_index]))

plt.show()

In [25]:

Y_train = np_utils.to_categorical(Y_train, 10)

Y_test = np_utils.to_categorical(Y_test, 10)

In [26]:

print(Y_train[0])

[0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]
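The printed row has its single 1 at index 2, which matches the first training label (2, Pullover). As a rough illustration of what the one-hot step produces, the sketch below builds the same kind of encoding with plain NumPy. The function `one_hot` is a hypothetical helper written for this example, not the Keras implementation.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Return a (len(labels), num_classes) float array with a single 1 per row."""
    labels = np.asarray(labels, dtype=int)
    encoded = np.zeros((labels.size, num_classes), dtype="float32")
    encoded[np.arange(labels.size), labels] = 1.0
    return encoded

sample = one_hot([2, 9, 6, 0, 3], 10)  # first five training labels shown earlier
print(sample[0])
print(sample.argmax(axis=1))  # argmax recovers the integer labels
```

Taking the argmax along each row is the usual way to get back from one-hot vectors (or softmax outputs) to integer class indices.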

In [27]:

print(Y_test)

[[1. 0. 0. ... 0. 0. 0.]

[0. 1. 0. ... 0. 0. 0.]

[0. 0. 1. ... 0. 0. 0.]

...

[0. 0. 0. ... 0. 1. 0.]

[0. 0. 0. ... 0. 1. 0.]

[0. 1. 0. ... 0. 0. 0.]]

CNN model

In [28]:

# Configure the convolutional neural network

model = Sequential()

model.add(Conv2D(32, kernel_size=(3, 3), input_shape = (28, 28, 1), activation='relu')) # different

model.add(Conv2D(64, (3, 3), activation='relu'))

model.add(MaxPooling2D(pool_size=2))

model.add(Dropout(0.25))

model.add(Flatten())

model.add(Dense(128, activation='relu'))

model.add(Dropout(0.5))

model.add(Dense(10, activation='softmax'))

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

2022-02-15 08:09:48.668516: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2022-02-15 08:09:48.746180: I tensorflow/core/platform/cpu_feature_guard.cc:142] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 AVX512F FMA

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

2022-02-15 08:09:50.317133: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1510] Created device /job:localhost/replica:0/task:0/device:GPU:0 with 15403 MB memory: -> device: 0, name: Tesla P100-PCIE-16GB, pci bus id: 0000:00:04.0, compute capability: 6.0

In [29]:

model.summary()

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d (Conv2D) (None, 26, 26, 32) 320

_________________________________________________________________

conv2d_1 (Conv2D) (None, 24, 24, 64) 18496

_________________________________________________________________

max_pooling2d (MaxPooling2D) (None, 12, 12, 64) 0

_________________________________________________________________

dropout (Dropout) (None, 12, 12, 64) 0

_________________________________________________________________

flatten (Flatten) (None, 9216) 0

_________________________________________________________________

dense (Dense) (None, 128) 1179776

_________________________________________________________________

dropout_1 (Dropout) (None, 128) 0

_________________________________________________________________

dense_1 (Dense) (None, 10) 1290

=================================================================

Total params: 1,199,882

Trainable params: 1,199,882

Non-trainable params: 0

_________________________________________________________________
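The shapes and parameter counts in the summary can be reproduced with a little arithmetic. The sketch below is plain Python with hypothetical helper names. It assumes unpadded ("valid") convolutions with stride 1, which is consistent with the 28 -> 26 -> 24 shapes listed above.

```python
def conv2d_out(size, kernel, stride=1):
    """Spatial output size of a convolution without padding ('valid')."""
    return (size - kernel) // stride + 1

def conv2d_params(kernel, in_channels, filters):
    """Weights (kernel*kernel*in_channels per filter) plus one bias per filter."""
    return (kernel * kernel * in_channels + 1) * filters

def dense_params(inputs, units):
    """One weight per input-unit pair plus one bias per unit."""
    return (inputs + 1) * units

side = conv2d_out(28, 3)            # first Conv2D: 28 -> 26
side = conv2d_out(side, 3)          # second Conv2D: 26 -> 24
side = side // 2                    # MaxPooling2D(pool_size=2): 24 -> 12
flat = side * side * 64             # Flatten: 12 * 12 * 64

counts = [
    conv2d_params(3, 1, 32),        # conv2d
    conv2d_params(3, 32, 64),       # conv2d_1
    dense_params(flat, 128),        # dense
    dense_params(128, 10),          # dense_1
]
print(flat, counts, sum(counts))
```

Almost all of the parameters sit in the first Dense layer, because it connects 9,216 flattened features to 128 units.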

- Model optimization

In [30]:

# Model optimization settings

MODEL_DIR = './model/'

if not os.path.exists(MODEL_DIR):

    os.mkdir(MODEL_DIR)

modelpath='./model/{epoch:02d}-{val_loss:.4f}.hdf5'

checkpointer = ModelCheckpoint(filepath=modelpath, monitor='val_loss', verbose=1, save_best_only=True)

early_stopping_callback = EarlyStopping(monitor='val_loss', patience=10)

In [31]:

# Run the model

history = model.fit(X_train, Y_train, validation_data=(X_test, Y_test), epochs=30, batch_size=200, verbose=0, callbacks=[early_stopping_callback, checkpointer])

2022-02-15 08:09:51.615996: I tensorflow/compiler/mlir/mlir_graph_optimization_pass.cc:185] None of the MLIR Optimization Passes are enabled (registered 2)

2022-02-15 08:09:52.761190: I tensorflow/stream_executor/cuda/cuda_dnn.cc:369] Loaded cuDNN version 8005

Epoch 00001: val_loss improved from inf to 0.32456, saving model to ./model/01-0.3246.hdf5

Epoch 00002: val_loss improved from 0.32456 to 0.26909, saving model to ./model/02-0.2691.hdf5

Epoch 00003: val_loss improved from 0.26909 to 0.24979, saving model to ./model/03-0.2498.hdf5

Epoch 00004: val_loss improved from 0.24979 to 0.23318, saving model to ./model/04-0.2332.hdf5

Epoch 00005: val_loss improved from 0.23318 to 0.22127, saving model to ./model/05-0.2213.hdf5

Epoch 00006: val_loss improved from 0.22127 to 0.20935, saving model to ./model/06-0.2093.hdf5

Epoch 00007: val_loss improved from 0.20935 to 0.20668, saving model to ./model/07-0.2067.hdf5

Epoch 00008: val_loss improved from 0.20668 to 0.20279, saving model to ./model/08-0.2028.hdf5

Epoch 00009: val_loss did not improve from 0.20279

Epoch 00010: val_loss improved from 0.20279 to 0.19836, saving model to ./model/10-0.1984.hdf5

Epoch 00011: val_loss did not improve from 0.19836

Epoch 00012: val_loss improved from 0.19836 to 0.19533, saving model to ./model/12-0.1953.hdf5

Epoch 00013: val_loss did not improve from 0.19533

Epoch 00014: val_loss did not improve from 0.19533

Epoch 00015: val_loss did not improve from 0.19533

Epoch 00016: val_loss did not improve from 0.19533

Epoch 00017: val_loss did not improve from 0.19533

Epoch 00018: val_loss did not improve from 0.19533

Epoch 00019: val_loss did not improve from 0.19533

Epoch 00020: val_loss did not improve from 0.19533

Epoch 00021: val_loss did not improve from 0.19533

Epoch 00022: val_loss did not improve from 0.19533
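The log stops at epoch 22 even though epochs=30 was requested. That is what patience=10 implies: the last improvement came at epoch 12, and ten epochs without improvement followed. The sketch below replays this logic in plain Python. It is a simplified model of early stopping, not the Keras source. The log does not print val_loss for non-improving epochs, so those entries use a placeholder (`UNKNOWN`) rather than invented numbers.

```python
import math

def simulate_early_stopping(val_losses, patience):
    """Return (best_epoch, stop_epoch), both 1-based; stop_epoch is None if never triggered."""
    best, best_epoch, wait = math.inf, None, 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    return best_epoch, None

UNKNOWN = math.inf  # placeholder: the log does not print non-improving values
val_losses = [0.32456, 0.26909, 0.24979, 0.23318, 0.22127, 0.20935,
              0.20668, 0.20279, UNKNOWN, 0.19836, UNKNOWN, 0.19533] + [UNKNOWN] * 10

print(simulate_early_stopping(val_losses, patience=10))
```

With these inputs the function reports epoch 12 as the best epoch and epoch 22 as the stopping point, in line with the log.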

In [32]:

# Print the test accuracy

print("\n Test Accuracy: %.4f" % (model.evaluate(X_test, Y_test)[1]))

313/313 [==============================] - 1s 3ms/step - loss: 0.2232 - accuracy: 0.9375

Test Accuracy: 0.9375
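One caveat about this number: the run passes the test set as validation_data, so early stopping and checkpoint selection were driven by the same data that is reported as test accuracy here. A common alternative is to hold out part of the training set for validation. The sketch below shows one way to do that with NumPy. The helper `holdout_split` and the tiny demo arrays are hypothetical and are not part of the original notebook.

```python
import numpy as np

def holdout_split(X, Y, val_fraction=0.1, seed=0):
    """Shuffle once and split arrays into (X_tr, Y_tr, X_val, Y_val)."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(X))
    n_val = int(len(X) * val_fraction)
    val_idx, tr_idx = order[:n_val], order[n_val:]
    return X[tr_idx], Y[tr_idx], X[val_idx], Y[val_idx]

# Tiny stand-in arrays with the same layout as X_train / Y_train.
X_demo = np.zeros((100, 28, 28, 1))
Y_demo = np.eye(10)[np.arange(100) % 10]

X_tr, Y_tr, X_val, Y_val = holdout_split(X_demo, Y_demo, val_fraction=0.1)
print(X_tr.shape, X_val.shape)
```

The resulting pair could then be passed as validation_data=(X_val, Y_val), leaving the test set untouched until the final evaluation. Keras's fit() also accepts a validation_split argument that serves a similar purpose.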

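- The best model (lowest val_loss, 0.19533) was saved at epoch 12, and early stopping ended training after epoch 22 (test accuracy: 93.75%)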

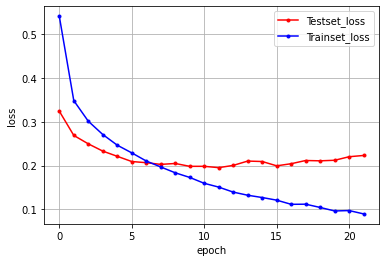
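Note that model.evaluate() above runs on the weights from the last epoch, not on the best checkpoint. That would explain why the evaluated loss (0.2232) is higher than the best logged val_loss (0.19533). Because the checkpoint file names follow the pattern `{epoch:02d}-{val_loss:.4f}.hdf5`, the best file can be picked by parsing the names. The sketch below does that in plain Python; `best_checkpoint` is a hypothetical helper.

```python
def best_checkpoint(filenames):
    """Pick the file with the lowest val_loss from names like '12-0.1953.hdf5'."""
    def val_loss(name):
        stem = name.rsplit(".", 1)[0]          # '12-0.1953'
        return float(stem.split("-", 1)[1])    # 0.1953
    return min(filenames, key=val_loss)

# File names taken from the training log above.
saved = ["01-0.3246.hdf5", "02-0.2691.hdf5", "03-0.2498.hdf5", "04-0.2332.hdf5",
         "05-0.2213.hdf5", "06-0.2093.hdf5", "07-0.2067.hdf5", "08-0.2028.hdf5",
         "10-0.1984.hdf5", "12-0.1953.hdf5"]

print(best_checkpoint(saved))
```

In a real run the list would come from something like os.listdir(MODEL_DIR), and the selected file could be restored with keras.models.load_model() before evaluating.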

Plotting the loss curves

In [33]:

# Note: the values plotted here are the losses recorded in history, not 1 - accuracy

# Loss on the test set (used as validation data)

y_vloss = history.history['val_loss']

# Loss on the training set

y_loss = history.history['loss']

# Plot the curves

# numpy.arange(n) => creates an array holding 0 to n-1

x_len = np.arange(len(y_loss))

# Draw y_vloss and y_loss with point markers, each with its own label and color

plt.plot(x_len, y_vloss, marker='.', c="red", label='Testset_loss')

plt.plot(x_len, y_loss, marker='.', c="blue", label='Trainset_loss')

# Add a grid and show the legend and axis labels

plt.legend(loc='upper right')

# plt.axis([0, 20, 0, 0.35])

plt.grid()

plt.xlabel('epoch')

plt.ylabel('loss')

plt.show()
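One detail of the x axis: np.arange(len(y_loss)) starts at 0, so the point for "Epoch 00001" in the log is drawn at x = 0. The short sketch below, using made-up loss values, shows both the 0-based range used in the notebook and a 1-based variant that lines up with the epoch numbers in the log.

```python
import numpy as np

y_loss_demo = [0.5, 0.4, 0.3]              # made-up values, for illustration only

x_zero_based = np.arange(len(y_loss_demo))          # 0, 1, 2 (as in the notebook)
x_one_based = np.arange(1, len(y_loss_demo) + 1)    # 1, 2, 3 (matches "Epoch 00001")

print(x_zero_based.tolist(), x_one_based.tolist())
```

Either choice gives the same curve shape; only the tick labels shift by one.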
